Ensure production readiness

This guide takes 10 minutes to complete, and aims to cover:

- Some advanced types of properties that can be added to blueprints, and what can be achieved by using them.

- The value and flexibility of scorecards in Port.

🎬 If you would like to follow along to a video that implements this guide, check out this one by @TeKanAid 🎬

- This guide assumes you have a Port account and that you have finished the onboarding process. We will use the service blueprint that was created during the onboarding process.

The goal of this guide

In this guide we will set various standards for the production readiness of our services, and see how to use them as part of our CI.

After completing it, you will get a sense of how it can benefit different personas in your organization:

- Platform engineers will be able to define policies for any service, and automatically pass/fail releases accordingly.

- Developers will be able to easily see which policies set by the platform engineer are not met, and what they need to fix.

- R&D managers will get a bird's-eye view of the state of all services in the organization.

Expand your service blueprint

In this guide we will add two new properties to our service blueprint, which we will then use to set production readiness standards:

- The service's on-call, fetched from Pagerduty.

- The service's Code owners, fetched from GitHub.

Add an on-call to your services

Port offers various integrations with incident response platforms.

In this guide, we will use Pagerduty to get our services' on-call.

Create the necessary Pagerduty resources

If you already have a Pagerduty account that you can play around with, feel free to skip this step.

-

Create a Pagerduty account (free 14-day trial).

-

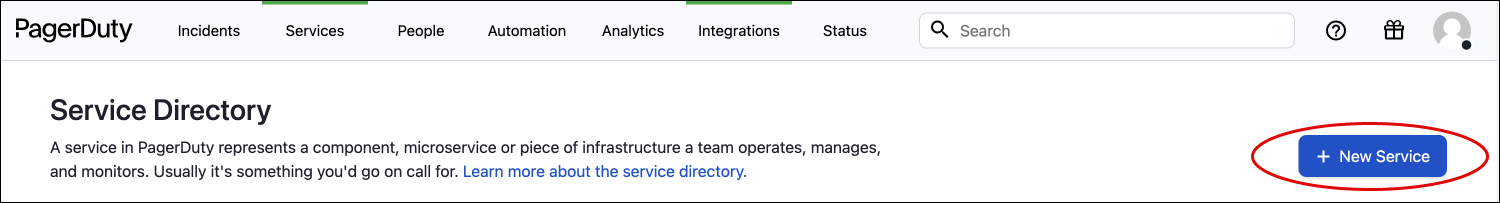

Create a new service:

- Name the service

DemoPdService. - Choose the existing

Defaultescalation policy. - Under

Reduce noiseuse the recommended settings. - Under

Integrationsscroll down and click onCreate service without an integration.

Integrate Pagerduty into Port

Now let's bring our Pagerduty data into Port. Port's Pagerduty integration automatically fetches Services and Incidents, and creates blueprints and entities for them.

To install the integration:

- Go to your data sources page, and click on the + Data source button in the top-right corner.

- Under the Incident Management section, choose Pagerduty.

- As you can see in this form, Port supports multiple installation methods. This integration can be installed in your environment (e.g. on your Kubernetes cluster), or it can be hosted by Port, on Port's infrastructure.

  For this guide, we will use the Hosted by Port method.

- Enter the required parameters:

  - Token - Your Pagerduty API token. To create one, see the Pagerduty documentation.

    Port secrets: The Token field is a Port secret, meaning it will be encrypted and stored securely in Port. Select a secret from the dropdown, or create a new one by clicking on + Add secret. Learn more about Port secrets here.

  - API URL - The Pagerduty API URL. For most users, this will be https://api.pagerduty.com. If you use the EU data centers, set this to https://api.eu.pagerduty.com.

- Click Done. Port will now install the integration and start fetching your Pagerduty data. This may take a few minutes.

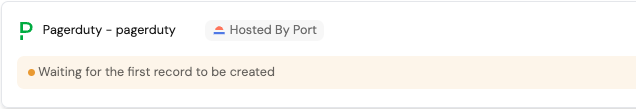

You can see the integration in the Data sources page. When ready, it will look like this:

Great! Now that the integration is installed, we should see some new components in Port:

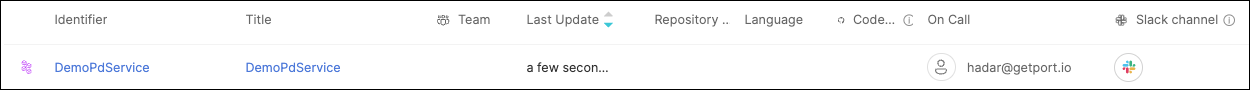

- Go to your Builder. You should now see two new blueprints created by the integration - PagerDuty Service and PagerDuty Incident.

- Go to your Software catalog and click on PagerDuty Services in the sidebar. You should now see a new entity created for our DemoPdService, with a populated On-call property.

Add an on-call property to the service blueprint

Now that Port is synced with our Pagerduty resources, let's reflect the Pagerduty service's on-call in our services.

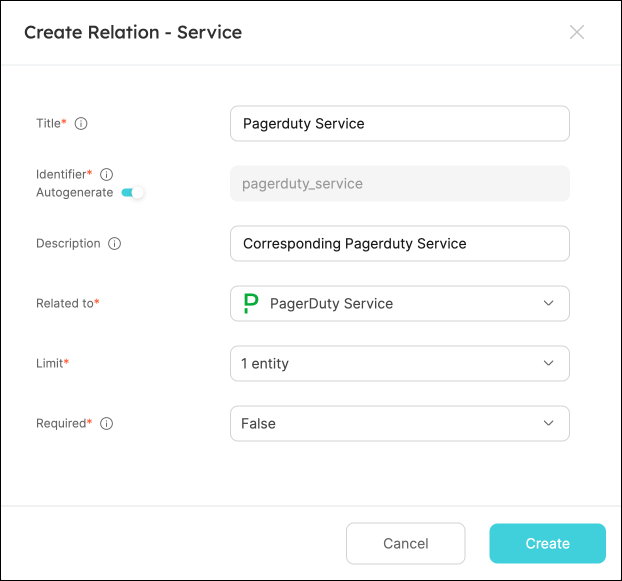

First, we will need to create a relation between our services and the corresponding Pagerduty services.

- Fill out the form like this, then click Create:

Now that the blueprints are related, let's create a mirror property in our service to display its on-call.

- Choose the Service blueprint again, and under the PagerDuty Service relation, click on New mirror property.

  Fill the form out like this, then click Create:

- Now that our mirror property is set, we need to assign the relevant Pagerduty service to each of our services. This can be done by adding some mapping logic. Go to your data sources page, and click on your Pagerduty integration:

Add the following YAML block to the mapping under the resources key, then click save & resync:

Relation mapping:

    - kind: services
      selector:
        query: "true"
      port:
        entity:
          mappings:
            identifier: .name | gsub("[^a-zA-Z0-9@_.:/=-]"; "-") | tostring
            title: .name
            blueprint: '"service"'
            properties: {}
            relations:
              pagerduty_service: .id

What we just did was map the Pagerduty service to the relation between it and our services.
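To make the identifier expression in the mapping more concrete, here is a rough Python equivalent of the jq filter. This is only an illustration: the function name `to_port_identifier` is made up for this sketch, and Port evaluates the jq expression itself, not Python.

```python
import re

# Characters outside this set are replaced with "-", mirroring the
# character class used in the mapping's gsub() call.
_DISALLOWED = re.compile(r"[^a-zA-Z0-9@_.:/=-]")


def to_port_identifier(pagerduty_service_name: str) -> str:
    """Hypothetical helper: approximate the mapping's identifier expression."""
    return _DISALLOWED.sub("-", pagerduty_service_name)


print(to_port_identifier("DemoPdService"))    # DemoPdService
print(to_port_identifier("Demo Pd Service"))  # Demo-Pd-Service
```

Under this reading, a Pagerduty service named Demo Pd Service would be matched to the Port service whose identifier is Demo-Pd-Service.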

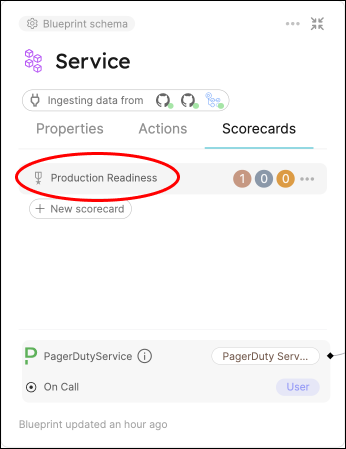

Now, if our service identifier is equal to the Pagerduty service's name, the service will automatically have its on-call property filled: 🎉

Note that you can always perform this assignment manually if you wish:

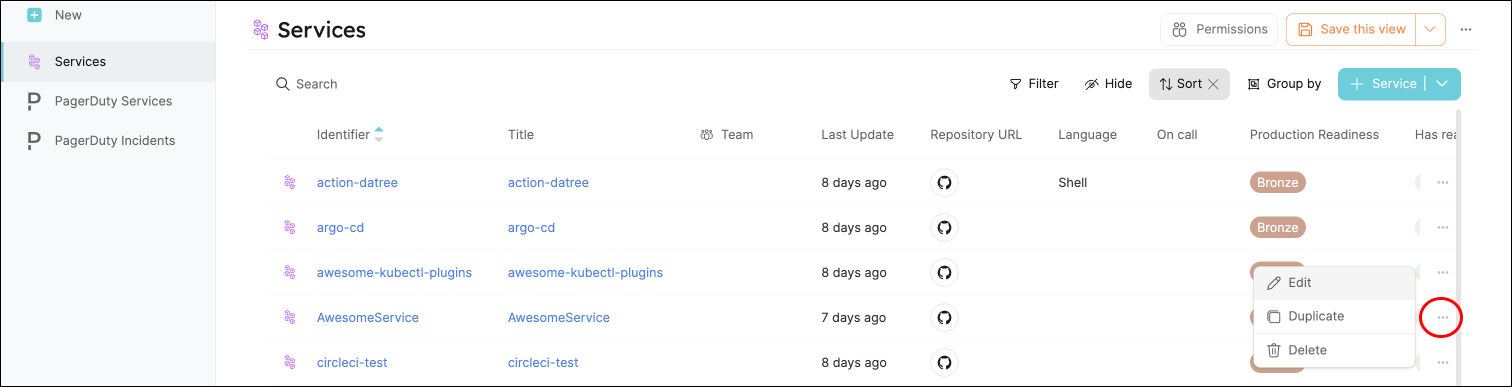

- Go to your Software catalog, choose any service in the table under Services, click on the ..., and click Edit:

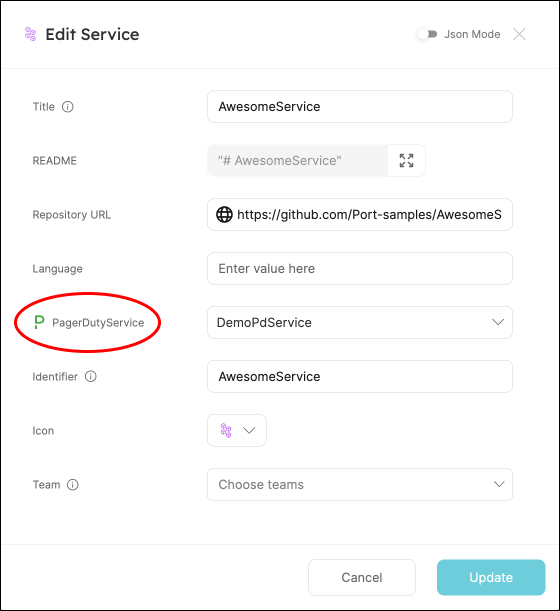

- In the form you will now see a property named PagerDuty Service. Choose the DemoPdService we created from the dropdown, then click Update:

Display each service's code owners

Git providers allow you to add a CODEOWNERS file to a repository, specifying its owners. See the relevant documentation for details and examples:

- GitHub

- GitLab

- Bitbucket
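As a quick illustration of the file format, the sketch below parses CODEOWNERS-style text into (pattern, owners) pairs. It is deliberately simplified and ignores provider-specific features; the sample content and the `parse_codeowners` helper are hypothetical. Port ingests the file contents as-is, so no parsing like this is needed to follow the guide.

```python
def parse_codeowners(text: str) -> list[tuple[str, list[str]]]:
    """Return (pattern, owners) pairs from CODEOWNERS-style text."""
    rules = []
    for raw_line in text.splitlines():
        line = raw_line.strip()
        # Skip blank lines and full-line comments.
        if not line or line.startswith("#"):
            continue
        # The first token is the path pattern, the rest are its owners.
        pattern, *owners = line.split()
        rules.append((pattern, owners))
    return rules


# Hypothetical example content, not taken from a real repository.
sample = """\
# Default owners for everything in the repository
*        @acme/platform-team
/docs/   @alice @bob
"""

for pattern, owners in parse_codeowners(sample):
    print(pattern, "->", ", ".join(owners))
```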

Let's see how we can easily ingest a CODEOWNERS file into our existing services:

Add a codeowners property to the service blueprint

-

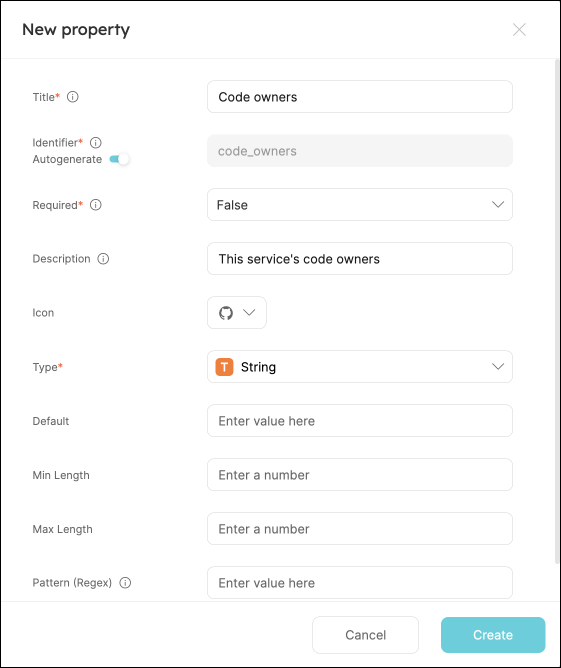

Go to your Builder again, choose the

Serviceblueprint, and clickNew property. -

Fill in the form like this:

Note theidentifierfield value, we will need it in the next step.

-

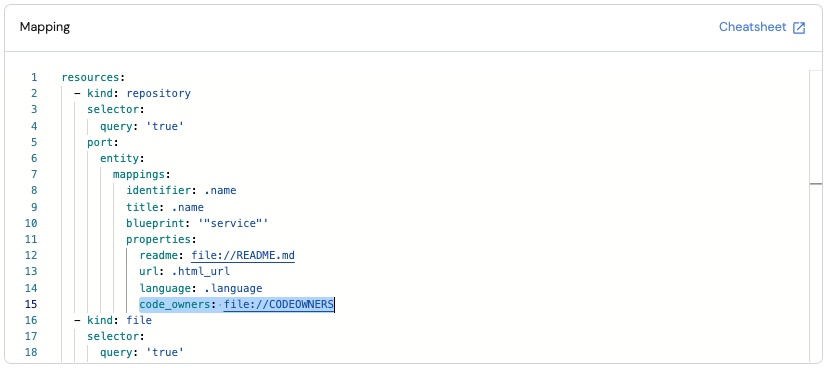

Next we will update the Github exporter mapping and add the new property. Go to your data sources page.

-

Under

Exporters, click on the Github exporter with your organization name. -

In the mapping YAML (the bottom-left panel), add the line

code_owners: file://CODEOWNERSas shown here, then clickResync:

Remember the identifier from step 2? This tells Port how to populate the new property 😎

Going back to our Catalog, we can now see that our entities have their code owners displayed.

Update your service's scorecard

Now let's use the properties we created to set standards for our services.

Add rules to existing scorecard

Say we want to ensure each service meets our new requirements, with different levels of importance. Our Service blueprint already has a scorecard called Production readiness, with three rules.

Let's add our metrics to it:

- Bronze - each service must have a Readme (we have already defined this in the quickstart guide).

- Gold - each service must have an on-call defined.

Now let's implement it:

- Go to your Builder, choose the Service blueprint, click on Scorecards, then click our existing Production readiness scorecard:

- Replace the content with this, then click Save:

Scorecard schema:

    {
      "identifier": "ProductionReadiness",
      "title": "Production Readiness",
      "rules": [
        {
          "identifier": "hasReadme",
          "description": "Checks if the service has a readme file in the repository",
          "title": "Has a readme",
          "level": "Bronze",
          "query": {
            "combinator": "and",
            "conditions": [
              {
                "operator": "isNotEmpty",
                "property": "readme"
              }
            ]
          }
        },
        {
          "identifier": "usesSupportedLang",
          "description": "Checks if the service uses one of the supported languages. You can change this rule to include the supported languages in your organization by editing the blueprint via the \"Builder\" page",
          "title": "Uses a supported language",
          "level": "Silver",
          "query": {
            "combinator": "or",
            "conditions": [
              {
                "operator": "=",
                "property": "language",
                "value": "Python"
              },
              {
                "operator": "=",
                "property": "language",
                "value": "JavaScript"
              },
              {
                "operator": "=",
                "property": "language",
                "value": "React"
              },
              {
                "operator": "=",
                "property": "language",
                "value": "GoLang"
              }
            ]
          }
        },
        {
          "identifier": "hasTeam",
          "description": "Checks if the service has a team that owns it (according to the \"Team\" property of the service)",
          "title": "Has a Team",
          "level": "Gold",
          "query": {
            "combinator": "and",
            "conditions": [
              {
                "operator": "isNotEmpty",
                "property": "$team"
              }
            ]
          }
        },
        {
          "identifier": "hasOncall",
          "title": "Has On-call",
          "level": "Gold",
          "query": {
            "combinator": "and",
            "conditions": [
              {
                "operator": "isNotEmpty",
                "property": "on_call"
              }
            ]
          }
        }
      ]
    }
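To get a feel for how a rule's query reads, here is a minimal Python sketch that evaluates a rule against a flat dictionary of service properties. It is not Port's implementation: it covers only the two operators used in the schema above (isNotEmpty and =), and the `condition_passes` and `rule_passes` helpers and the sample service are hypothetical.

```python
def condition_passes(condition: dict, properties: dict) -> bool:
    """Evaluate a single condition against a service's properties."""
    value = properties.get(condition["property"])
    operator = condition["operator"]
    if operator == "isNotEmpty":
        # Treat missing values and empty containers/strings as empty.
        return value not in (None, "", [], {})
    if operator == "=":
        return value == condition["value"]
    raise ValueError(f"operator not covered by this sketch: {operator}")


def rule_passes(rule: dict, properties: dict) -> bool:
    """Combine the rule's conditions using its "and"/"or" combinator."""
    query = rule["query"]
    results = [condition_passes(c, properties) for c in query["conditions"]]
    return all(results) if query["combinator"] == "and" else any(results)


has_oncall = {
    "identifier": "hasOncall",
    "query": {
        "combinator": "and",
        "conditions": [{"operator": "isNotEmpty", "property": "on_call"}],
    },
}
supported_lang = {
    "identifier": "usesSupportedLang",
    "query": {
        "combinator": "or",
        "conditions": [
            {"operator": "=", "property": "language", "value": "Python"},
            {"operator": "=", "property": "language", "value": "GoLang"},
        ],
    },
}

# Hypothetical service: written in GoLang, with no on-call assigned yet.
service = {"language": "GoLang", "on_call": None}

print(rule_passes(supported_lang, service))  # True
print(rule_passes(has_oncall, service))      # False
```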

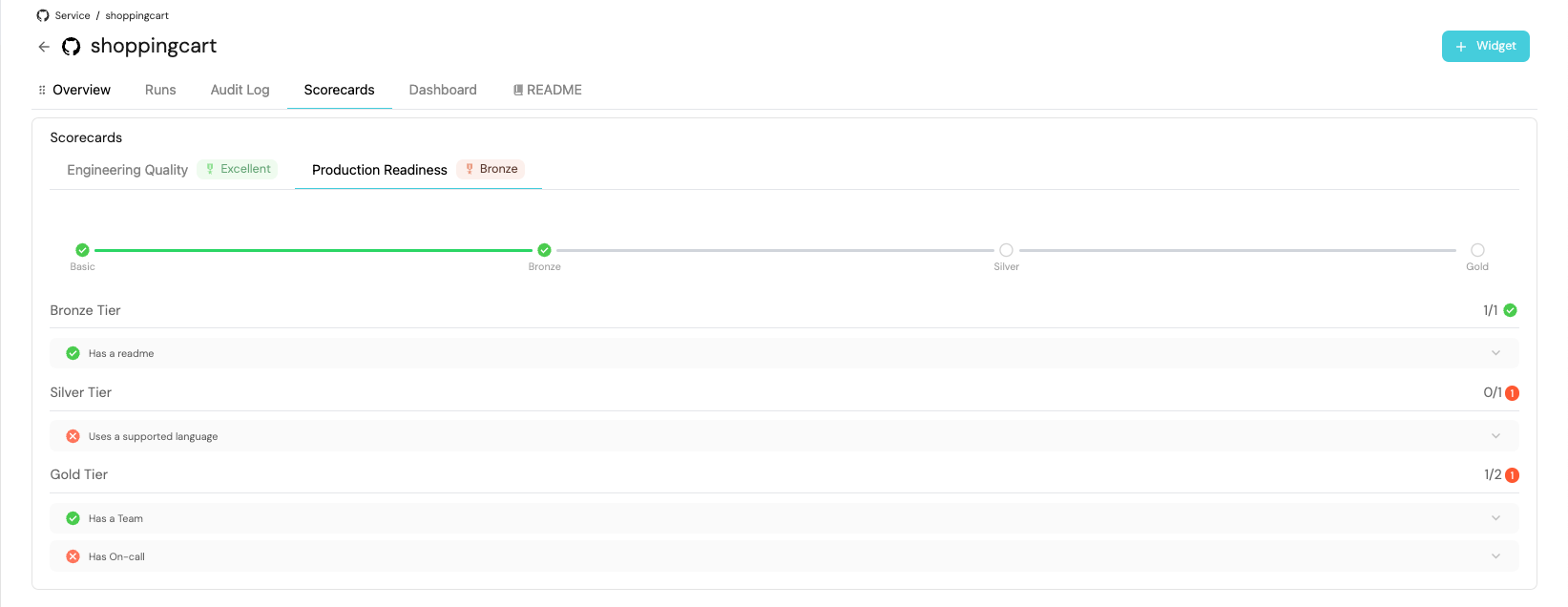

Now go to your Catalog and click on any of your services.

Click on the Scorecards tab and you will see the score of the service, with details of which checks passed/failed:

Possible daily routine integrations

- Use Port's API to check for scorecard compliance from your CI and pass/fail it accordingly.

- Notify periodically via Slack about services that fail gold/silver/bronze validations.

- Send a weekly/monthly report for managers showing the number of services that do not meet specific standards.
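As a rough idea of what the CI check could look like, the sketch below decides pass/fail from an entity payload that has already been fetched from Port's API. Several things here are assumptions rather than documented facts: that the payload carries a scorecards object keyed by scorecard identifier with a level field, that levels are ordered Basic, Bronze, Silver, Gold, and the helper names themselves. Check Port's API reference for the actual response shape before relying on it.

```python
# Assumed level ordering, lowest first; "Basic" is assumed to be the default level.
LEVELS = ["Basic", "Bronze", "Silver", "Gold"]


def meets_level(entity: dict, scorecard_id: str, required_level: str) -> bool:
    """Return True if the entity's scorecard level is at least required_level."""
    scorecard = entity.get("scorecards", {}).get(scorecard_id, {})
    current_level = scorecard.get("level", "Basic")
    return LEVELS.index(current_level) >= LEVELS.index(required_level)


def ci_exit_code(entity: dict, scorecard_id: str, required_level: str) -> int:
    """Map the compliance check to a process exit code (0 = pass, 1 = fail)."""
    return 0 if meets_level(entity, scorecard_id, required_level) else 1


# Hypothetical payload, shaped the way this sketch assumes the API returns it.
sample_entity = {
    "identifier": "my-service",
    "scorecards": {"ProductionReadiness": {"level": "Silver"}},
}

print(ci_exit_code(sample_entity, "ProductionReadiness", "Bronze"))  # 0
print(ci_exit_code(sample_entity, "ProductionReadiness", "Gold"))    # 1
```

A CI step could pass the returned value to sys.exit so that the pipeline fails when a service falls below the required level.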

Conclusion

Production readiness is something that needs to be monitored and handled constantly. In a microservice-heavy environment, things like codeowners and on-call management are critical.

With Port, standards are easy to set up, prioritize and track. Using Port's API, you can also create/get/modify your scorecards from anywhere, allowing seamless integration with other platforms and services in your environment.

More relevant guides and examples: